An Architecture for Ambient Computing

Overview

Over the past few years, LIMSI and the Laboratoire Aimé Cotton have been developing an electronic locomotion assistance device for the blind, called the Télétact [1] (fig. 1). The system uses a laser telemeter to measure the distances to obstacles and transforms them into tactile vibrations or musical notes (the higher the tone, the closer the obstacle). Users are thus aware of obstacles in advance, so they can anticipate their movements and follow smoother trajectories.

The system has been used daily by a few dozen blind people and has proved very useful. However, although it performs well in known environments, it cannot guide people through unknown ones. What blind people need is contextual information about the places they visit, depending on their goals. For instance, they would benefit from guidance information when trying to locate a given room in an office building [2, 3]. Moreover, in some places, the availability of dynamic information is crucial for people to find their way: in an airport, passengers need personalized information about their flight.

This of course applies to blind people, but to sighted people as well. In airports, overhead information screens certainly hold information relevant to the people nearby, but they are hard to read because that information is scattered among a great many irrelevant items.

Solutions

For the reasons stated above, we aim to design a context-aware, goal-driven architecture for public places that will enable people to interact with their environment so as to obtain personalized, relevant information. Interaction can be multimodal and take users' capabilities into account. For instance, departure times and boarding desk locations could be delivered to sighted people through nearby public screens, and to blind people through vocal messages sent to their cellular phones.

To provide context-aware information, one first has to capture context. For this reason, we have proposed a simple architecture to acquire context information by aggregating data from sensor input (e.g. RFID receivers, video signals) into abstract context information (e.g. people's locations). This component-based platform constitutes the interface between the real world and the rest of the system. It features minimal coupling between context capture and context usage in applications and performs consistency checks [4].
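As a rough illustration of this kind of aggregation, the sketch below turns raw RFID-like readings into abstract location events and passes them to subscribers that know nothing about the sensors. It is not the platform described in [4]: the names `SensorReading`, `LocationEvent` and `ContextAggregator`, the signal-strength rule, and the single consistency check (discarding unknown sensors or tags) are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class SensorReading:
    sensor_id: str   # hypothetical receiver identifier
    tag_id: str      # hypothetical tag carried by a person
    strength: float  # assumed convention: higher means closer


@dataclass(frozen=True)
class LocationEvent:
    person: str
    zone: str


class ContextAggregator:
    """Turns low-level readings into abstract location events."""

    def __init__(self, sensor_zones: Dict[str, str], tag_owners: Dict[str, str]):
        self.sensor_zones = sensor_zones  # sensor id -> zone name
        self.tag_owners = tag_owners      # tag id -> person name
        self.listeners: List[Callable[[LocationEvent], None]] = []

    def subscribe(self, listener: Callable[[LocationEvent], None]) -> None:
        # Applications only see LocationEvent objects, never raw readings.
        self.listeners.append(listener)

    def process(self, readings: List[SensorReading]) -> List[LocationEvent]:
        best: Dict[str, SensorReading] = {}
        for r in readings:
            # Illustrative consistency check: ignore unknown sensors or tags.
            if r.sensor_id not in self.sensor_zones or r.tag_id not in self.tag_owners:
                continue
            # Keep the strongest reading for each tag.
            if r.tag_id not in best or r.strength > best[r.tag_id].strength:
                best[r.tag_id] = r
        events = [
            LocationEvent(self.tag_owners[tag], self.sensor_zones[r.sensor_id])
            for tag, r in sorted(best.items())
        ]
        for event in events:
            for listener in self.listeners:
                listener(event)
        return events
```

Because subscribers receive only `LocationEvent` objects, a sensor technology could be swapped without touching application code, which is the loose coupling the paragraph above refers to.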

On top of this facility, we then propose a location-aware agent-based framework, in which agents represent both human beings and interactive devices (for instance, information screens meant to provide information about flights in airports, but also office doors, meant to give details about the office owner's public schedule). Agents are aware of the proximity of other agents, which enables them to initiate interaction.
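The proximity mechanism can be pictured with the minimal sketch below, in which every agent (human or device) is notified when another agent enters or leaves its neighborhood. The `Agent` class, the 2D coordinates, the fixed `radius` and the callback names are assumptions made for illustration; the actual framework may represent locations and notifications differently.

```python
import math
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Agent:
    name: str
    x: float
    y: float
    neighbors: Set[str] = field(default_factory=set)
    log: List[str] = field(default_factory=list)

    def on_approach(self, other: str) -> None:
        # Hook where an agent could initiate interaction.
        self.log.append(f"{other} came close")

    def on_leave(self, other: str) -> None:
        self.log.append(f"{other} went away")


def update_proximity(agents: List[Agent], radius: float) -> None:
    """Recompute each agent's neighbors and fire approach/leave callbacks."""
    for a in agents:
        current = {
            b.name
            for b in agents
            if b is not a and math.dist((a.x, a.y), (b.x, b.y)) <= radius
        }
        for name in sorted(current - a.neighbors):
            a.on_approach(name)
        for name in sorted(a.neighbors - current):
            a.on_leave(name)
        a.neighbors = current
```

In this sketch a screen agent and a passenger agent are treated identically; only what they do inside `on_approach` would differ.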

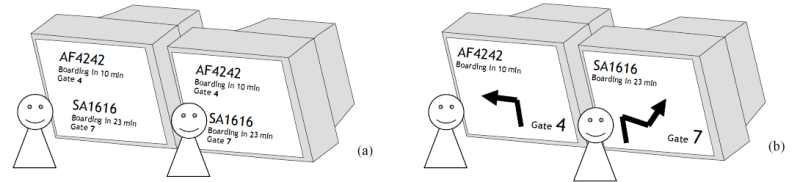

Using this framework, we have built an application in which public information screens in an airport display only the information relevant to passengers located nearby. Moreover, neighboring screens can collaborate dynamically to avoid displaying redundant information, without prior knowledge of each other (fig. 2).

Each user is assumed to be interested in one particular information item, called her semantic unit (s.u.). Neighboring screens will try to display her s.u. while abiding by the following set of constraints:

- Completeness: when a user is close to a number of displays, her s.u. must be provided by (at least) one of these devices,

- Stability: a user's s.u. must not move from one display to another, unless the user herself has moved,

- Real estate optimization: to prevent devices from being overloaded, s.u. duplication must be avoided whenever possible. This means that the total number of displayed s.u.'s must be minimal.

For usability reasons, we consider completeness (Constraint 1) to be stronger than stability (Constraint 2), which in turn is stronger than real estate optimization (Constraint 3). For instance, screen real estate will often not be fully optimized, so as to preserve display stability. Likewise, when a screen becomes saturated, some of its s.u.'s will be migrated elsewhere to make room for a new s.u., thus violating the stability constraint in favor of the completeness constraint.

Screen display is managed by a distributed incremental algorithm that enforces these constraints.
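To make the priority ordering concrete, here is a simplified, centralized sketch of one incremental step: what happens when a user arrives near some screens. It is not the distributed algorithm itself, and the `Displays` class, the per-screen `capacity`, and the tie-breaking rules are assumptions. An s.u. already shown nearby is reused (no duplication, no movement); otherwise the emptiest nearby screen is used; and only if all nearby screens are saturated is an existing s.u. migrated, trading stability for completeness.

```python
from typing import Dict, Optional, Set, Tuple


class Displays:
    def __init__(self, capacity: Dict[str, int]):
        self.capacity = capacity  # screen -> max number of s.u.'s (assumed)
        self.shown: Dict[str, Set[str]] = {s: set() for s in capacity}
        self.users: Dict[str, Tuple[str, Set[str]]] = {}  # user -> (s.u., nearby)

    def _free(self, screen: str) -> int:
        return self.capacity[screen] - len(self.shown[screen])

    def _add(self, su: str, nearby: Set[str]) -> Optional[str]:
        # Real estate + stability: reuse a nearby screen already showing su.
        for s in sorted(nearby):
            if su in self.shown[s]:
                return s
        # Otherwise use the nearby screen with the most free space.
        candidates = [s for s in sorted(nearby) if self._free(s) > 0]
        if not candidates:
            return None
        best = max(candidates, key=self._free)
        self.shown[best].add(su)
        return best

    def _migrate(self, nearby: Set[str]) -> bool:
        # Completeness beats stability: move one s.u. off a saturated screen
        # to another screen that every viewer of that s.u. can still see.
        for s in sorted(nearby):
            for su in sorted(self.shown[s]):
                common = set(self.capacity) - {s}
                for user_su, near in self.users.values():
                    if user_su == su:
                        common &= near
                targets = [
                    t for t in sorted(common)
                    if su in self.shown[t] or self._free(t) > 0
                ]
                if targets:
                    self.shown[s].discard(su)
                    self.shown[targets[0]].add(su)
                    return True
        return False

    def user_arrives(self, user: str, su: str, nearby: Set[str]) -> Optional[str]:
        """Return the screen serving the user, or None if none can."""
        self.users[user] = (su, set(nearby))
        screen = self._add(su, set(nearby))
        if screen is None and self._migrate(set(nearby)):
            screen = self._add(su, set(nearby))
        return screen
```

For example, with two single-slot screens `A` and `B`, a first user near both is served on `A`; when a second user who can only see `A` arrives, the first user's s.u. moves to `B` so that both users remain served.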

In this system, information screens use only one modality (visual), but we have also devised algorithms for choosing a modality in multimodal output devices. For instance, a door may be able to provide a static information item in the form of a written label (textual modality), as well as dynamic information through a speech synthesis device (audio modality). Depending on the capabilities and preferences of the approaching human agent (who may be sighted, blind or deaf), the door's agent will choose a suitable modality.
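One simple way to picture such a choice is sketched below: the device keeps only the modalities the user can perceive, then follows the user's stated preferences if any. The `UserProfile` fields, the modality names and the fallback rule are assumptions for the example, not the algorithms mentioned above.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Sequence, Tuple


@dataclass(frozen=True)
class UserProfile:
    perceivable: FrozenSet[str]     # modalities the user is able to perceive
    preferred: Tuple[str, ...] = ()  # optional ordered preferences


def choose_modality(device_modalities: Sequence[str],
                    user: UserProfile) -> Optional[str]:
    """Pick an output modality the device offers and the user can perceive."""
    usable = [m for m in device_modalities if m in user.perceivable]
    if not usable:
        return None  # the device cannot reach this user
    for m in user.preferred:
        if m in usable:
            return m
    # No stated preference applies: fall back to the device's own ordering.
    return usable[0]
```

With a door offering `("text", "audio")`, a blind user whose profile lists only `"audio"` would be served by speech synthesis, while a deaf user would get the written label.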

Perspectives

Test implementations of the aforementioned frameworks have been built. Integration, full-scale implementations and tests will be carried out over the next few months. User evaluations will help tune and improve the algorithms.

One of the applications of our architecture will be a context-aware locomotion assistance device for the blind, as mentioned in the introduction, able to provide users with semantic information about the places they visit [5].

At the moment, user goals are implicit (they are not distinct from the algorithms). It would be interesting to model them explicitly, along with other characteristics of user profiles and preferences.